All modern web application frameworks need replaceable application components, and there are plenty of reasons for this requirement. Some applications share common functionality, such as identity management, with other applications. However, the ability to extend this functionality or replace it entirely is absolutely crucial. Technology requirements change too fast to assume that a single implementation of some feature will remain sufficient for any significant length of time. Moreover, better tools for the job your component currently does will emerge, and developers need a way to exploit the benefits of these tools without having to hack the core system.

Trac is a Python web framework in its own right. That is, it implements several framework capabilities found in other frameworks such as Django and TurboGears. Trac is highly specialized as a project management system, so you wouldn't want to use Trac as a generalized web framework for some other domain. Project management for software projects is such a huge domain by itself that it makes sense to have a web framework centered around it.

Trac defines its own component system that allows developers to create new components that build on existing Trac functionality or replace it entirely. The component framework is flexible enough to load components in two formats: eggs and .py files. The component loader used by the Trac component system is in fact so useful that it sets the standard for how other Python web frameworks should load components.

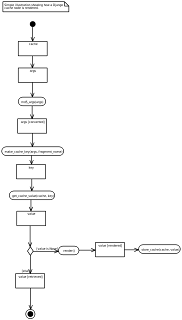

The Trac component framework loads all defined components when the environment starts its HTTP server, using the load_components() function defined in the loader.py module. The load_components() function can be thought of as the aggregate component loader, since it is responsible for loading all component types. It accepts a list of loaders in a keyword parameter and uses these loaders to differentiate between component types. The parameter has two default loaders, which load egg components and .py source file components. The load_components() function also accepts an extra path parameter, which allows the specified path to be searched for additional Trac components. This is useful because developers may want to maintain a repository of Trac components that do not reside in site-packages or the Trac environment. Finally, load_components() needs an environment parameter, which refers to the Trac environment in which the loader is currently executing. The loaders need this environment to determine whether the loaded components should be enabled. A custom loader, should a developer be inclined to write one, would have the same requirement. The environment also exposes other information that new loaders could use to provide enhanced functionality.
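The way these three parameters fit together might look roughly like the following. This is a minimal sketch and not Trac's actual loader.py: the name load_components_sketch, the DemoEnv stand-in, the auto_enable keyword, and the example paths are all assumptions made for illustration.

```python
import os


def load_components_sketch(env, extra_path=None, loaders=()):
    """Hypothetical aggregate loader: run every loader over one search path."""
    # Assumption: the environment exposes its directory as `env.path`,
    # and its plugins directory is always searched first.
    plugins_dir = os.path.normcase(
        os.path.realpath(os.path.join(env.path, "plugins")))
    search_path = [plugins_dir]
    if extra_path:
        search_path += list(extra_path)
    for loader in loaders:
        # Every loader is invoked with the same signature, so a new
        # component type only needs a new loader function.
        loader(env, search_path, auto_enable=plugins_dir)
    return search_path


class DemoEnv:
    """Stand-in for a Trac environment; only `path` is assumed here."""

    def __init__(self, path):
        self.path = path


calls = []


def recording_loader(env, search_path, auto_enable=None):
    # A custom loader only has to accept these three arguments.
    calls.append((list(search_path), auto_enable))


env = DemoEnv("/tmp/demo-env")
searched = load_components_sketch(
    env, extra_path=["/opt/shared-plugins"], loaders=[recording_loader])
```

Here the custom loader sees both the environment's plugins directory and the extra path, which is what would let a shared repository of components live outside the environment.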

As mentioned, the load_components() function specifies two default loaders for Trac components. These loaders are actually factories that build and return a loader function. This is done so that data from load_components() can be built into the loader function without altering the loader signature that load_components() invokes, which offers maximum flexibility. The first default loader, load_eggs(), loads Trac components in the egg format by iterating through the specified component search paths. The plugins directory of the current Trac environment is part of the search path by default. For each egg file found, the working set object, which is part of the pkg_resources package, is extended with that egg. Next, the distribution object, which represents the egg, is checked for trac.plugins entry points, and each entry point found is loaded. What is interesting about this approach is that it allows subordinate Trac components to be loaded: if an egg distribution contains a large amount of code and a couple of small Trac components, only the Trac components are loaded. The same cannot be said about load_py_files(), the second default loader provided by load_components(). It works in the same way as load_eggs() in that it searches the same paths, but it looks for .py files instead of egg files. When one is found, the loader imports the entire file, even if there are no subordinate Trac components within the module. In both default loaders, if the path in which a component was found is the plugins directory of the current Trac environment, that component is automatically enabled. Placing a component in the plugins directory therefore also acts as an enabling action, which eliminates a step.
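The factory idea and the auto-enable rule can be illustrated with a small source-file loader. This is only a sketch under stated assumptions, not the code in loader.py: make_py_loader, its module_prefix argument, and the returned list of (name, enabled) pairs are hypothetical, and the standard library's importlib stands in for whatever import mechanism Trac uses.

```python
import glob
import importlib.util
import os
import tempfile


def make_py_loader(module_prefix=""):
    """Hypothetical factory: bakes `module_prefix` into the returned loader."""

    def _load(env, search_path, auto_enable=None):
        loaded = []
        for path in search_path:
            for filename in sorted(glob.glob(os.path.join(path, "*.py"))):
                stem = os.path.splitext(os.path.basename(filename))[0]
                name = module_prefix + stem
                # Import the whole file; a source file has no entry points
                # that could narrow down what gets loaded.
                spec = importlib.util.spec_from_file_location(name, filename)
                module = importlib.util.module_from_spec(spec)
                spec.loader.exec_module(module)
                # Files found in the auto-enable directory count as enabled.
                enabled = (auto_enable is not None and
                           os.path.realpath(path) == os.path.realpath(auto_enable))
                loaded.append((name, enabled))
        return loaded

    return _load


# Demo with two throwaway directories standing in for the environment's
# plugins directory and an extra search path.
with tempfile.TemporaryDirectory() as plugins_dir, \
        tempfile.TemporaryDirectory() as extra_dir:
    for directory, stem in ((plugins_dir, "alpha"), (extra_dir, "beta")):
        with open(os.path.join(directory, stem + ".py"), "w") as handle:
            handle.write("LOADED = True\n")
    loader = make_py_loader(module_prefix="sketch_")
    result = loader(None, [plugins_dir, extra_dir], auto_enable=plugins_dir)
```

Because the prefix is captured by the closure, the caller can configure the loader once and still invoke it with the same three arguments as every other loader.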

There are some limitations with the Trac component framework. The _log_error() function nested inside the _load_eggs() loader shouldn't be a nested function; there is no real rationale for nesting it. Loading Python source files as Trac components is also quite limiting because we lose any notion of subordinate components, since we can't define entry points inside Python source files. If building Trac components, I would recommend using eggs as the only format.
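To make the entry point argument concrete, here is a rough sketch of how a group of entry points selects which modules get imported. It uses the standard library's importlib.metadata rather than the pkg_resources package discussed above, and the entry point declarations are hypothetical: standard library modules stand in for plugin modules so the example runs without any egg installed.

```python
from importlib.metadata import EntryPoint

# Hypothetical declarations. In a real egg these would come from the
# distribution's metadata instead of being built by hand.
declared = [
    EntryPoint(name="decoder", value="json.decoder", group="trac.plugins"),
    EntryPoint(name="minidom", value="xml.dom.minidom", group="trac.plugins"),
    EntryPoint(name="ignored", value="csv", group="console_scripts"),
]


def load_group(entry_points, group):
    """Import only the modules that entry points in `group` refer to."""
    loaded = {}
    for entry_point in entry_points:
        if entry_point.group == group:
            # load() imports the named module and returns it.
            loaded[entry_point.name] = entry_point.load()
    return loaded


modules = load_group(declared, "trac.plugins")
module_names = sorted(module.__name__ for module in modules.values())
```

A plain .py file has no equivalent place to declare such a group, which is why a source-file loader can only import the file as a whole.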